Services description

Let’s understand the environment we currently have and take a look at the deployed services.

To see the deployed services, you can either look at the OpenShift Console or run `oc get deployment -n retail`.
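If you want to inspect the result programmatically rather than read the console, the sketch below summarizes the JSON printed by `oc get deployment -n retail -o json`. It is a minimal illustration and not part of the workshop itself: the function name `summarize_deployments` and the sample deployment names are hypothetical. Only the fields it reads (`items[].metadata.name`, `spec.replicas`, `status.readyReplicas`) come from the Kubernetes Deployment API.

```python
import json


def summarize_deployments(oc_json: str) -> list[tuple[str, int, int]]:
    """Return (name, ready_replicas, desired_replicas) for each Deployment.

    `oc_json` is the text printed by `oc get deployment -n retail -o json`.
    """
    doc = json.loads(oc_json)
    summary = []
    for item in doc.get("items", []):
        name = item["metadata"]["name"]
        desired = item.get("spec", {}).get("replicas", 0)
        # readyReplicas is omitted from the status while no replica is ready.
        ready = item.get("status", {}).get("readyReplicas", 0)
        summary.append((name, ready, desired))
    return summary


# Hypothetical sample, shaped like the real command output.
SAMPLE = json.dumps({
    "kind": "List",
    "items": [
        {"metadata": {"name": "example-service-a"},
         "spec": {"replicas": 1},
         "status": {"readyReplicas": 1}},
        {"metadata": {"name": "example-service-b"},
         "spec": {"replicas": 1},
         "status": {}},
    ],
})

for name, ready, desired in summarize_deployments(SAMPLE):
    print(f"{name}: {ready}/{desired} ready")
```

In practice you would pass the real command output instead of `SAMPLE`, for example by piping it to the script and reading it from standard input.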


These are the 11 services currently deployed:

| Type | Description |
| --- | --- |
| Persistence | PostgreSQL database for cashback-related information. |
| Persistence | PostgreSQL database used by the legacy services. |
| Integration | Kafka connectors for database event streaming (Debezium). |
| Integration | Camel + Quarkus service for event-driven processing of expenses and customers. |
| Integration | Camel + Quarkus service for event-driven synchronization of product data with Elasticsearch. |
| Integration | Quarkus + Kafka Streams event-driven service for aggregating and synchronizing purchase data (sales data). |
| Integration | Quarkus + Camel event-driven service responsible for calculating cashback data and keeping it up to date in the new database. |
| Data simulation | A Quarkus application that simulates a pre-selected number of purchases in the retail database. |
| Data visualization | A Kafka client UI for visualizing events and topics. |
| Data visualization | Quarkus + Panache back-end service for visualizing cashback information. |
| Data visualization | Quarkus + Elasticsearch extension for visualizing the indexed data residing in Elasticsearch. |

Components configuration

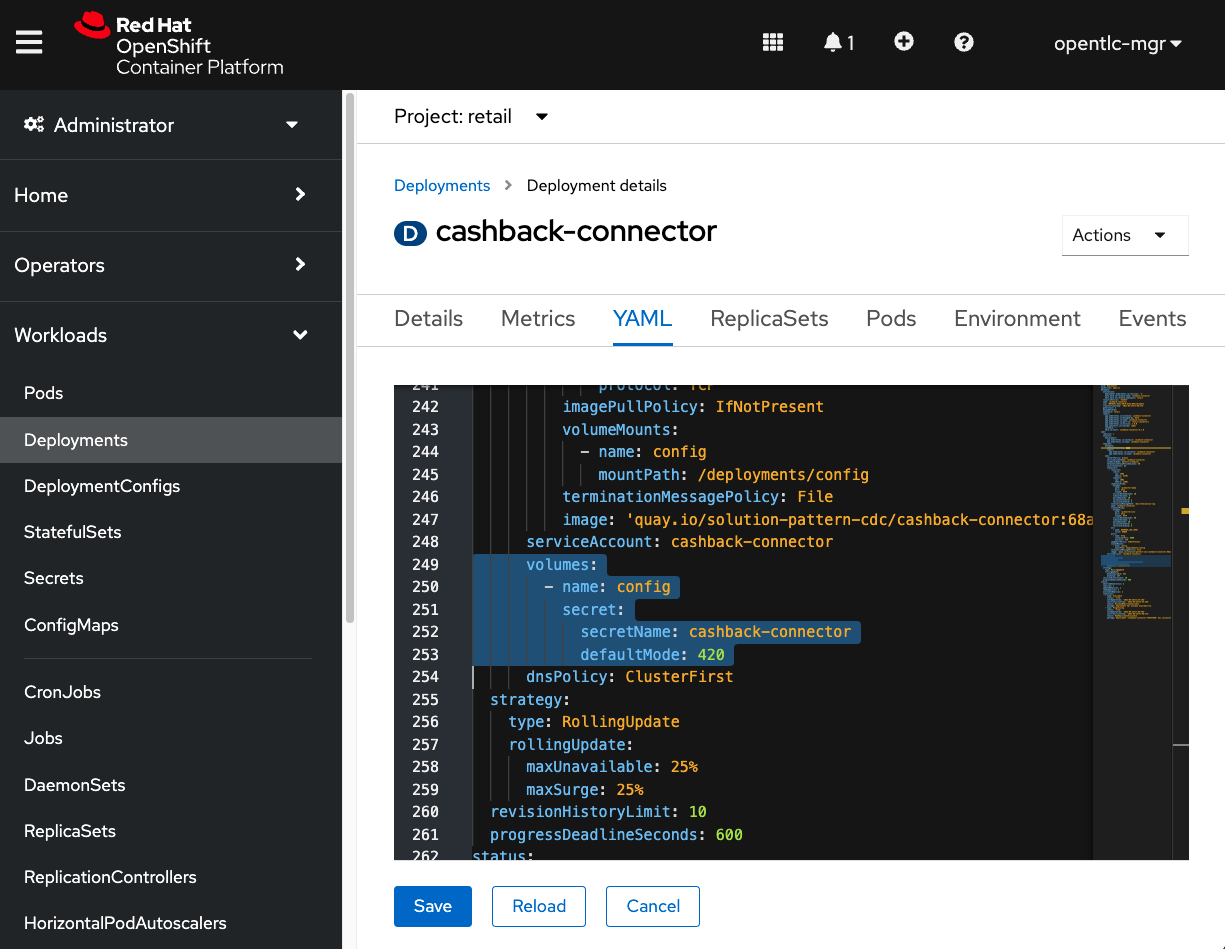

All the customization of the services is externalized using OpenShift secrets. As an example, let’s check the connection information for the cashback-connector service.

- Navigate to Workloads → Deployments → cashback-connector → YAML to see the configuration section of the deployment.
- To find out exactly which configuration values the cashback-connector service uses, take a look at the configured secret. In the left menu, navigate to Workloads → Secrets.
- In the filter, search for the name `cashback-connector` and select the secret.
- Scroll to the bottom of the page and click Reveal values, located in the Data section.
- The parameters you set in your Ansible provisioning inventory were used by the Helm charts to generate these final values, which are a mix of default template values and your custom configuration.
- This service is configured to:
  - Connect to the Kafka bootstrap server;
  - Subscribe to the topics `retail.sale-aggregated` and `retail.updates.public.customer`;
  - Publish events to the topic `retail.expense-event`;
  - Connect to cashback-db, a PostgreSQL database.
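The Reveal values action in the console decodes the secret for you. Kubernetes stores every entry in a Secret's `data` field base64-encoded, so the same values can be recovered from the output of `oc get secret cashback-connector -n retail -o json` (assuming the secret lives in the same `retail` namespace as the deployments). The sketch below shows only that decoding step. The function name `decode_secret_data` and the sample key and value are hypothetical and do not reflect the actual contents of the workshop secret.

```python
import base64
import json


def decode_secret_data(secret_json: str) -> dict[str, str]:
    """Decode the base64-encoded `data` entries of a Kubernetes Secret."""
    secret = json.loads(secret_json)
    # `data` may be missing or null for an empty Secret.
    data = secret.get("data") or {}
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in data.items()
    }


# Hypothetical Secret with a single made-up entry, for illustration only.
SAMPLE_SECRET = json.dumps({
    "kind": "Secret",
    "metadata": {"name": "cashback-connector"},
    "data": {
        "example.properties": base64.b64encode(
            b"example.key=example-value\n"
        ).decode("ascii"),
    },
})

for key, value in decode_secret_data(SAMPLE_SECRET).items():
    print(f"--- {key} ---")
    print(value)
```

Treat decoded values as sensitive: base64 is an encoding, not encryption, so anyone who can read the Secret can read the connection details.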

Additional information

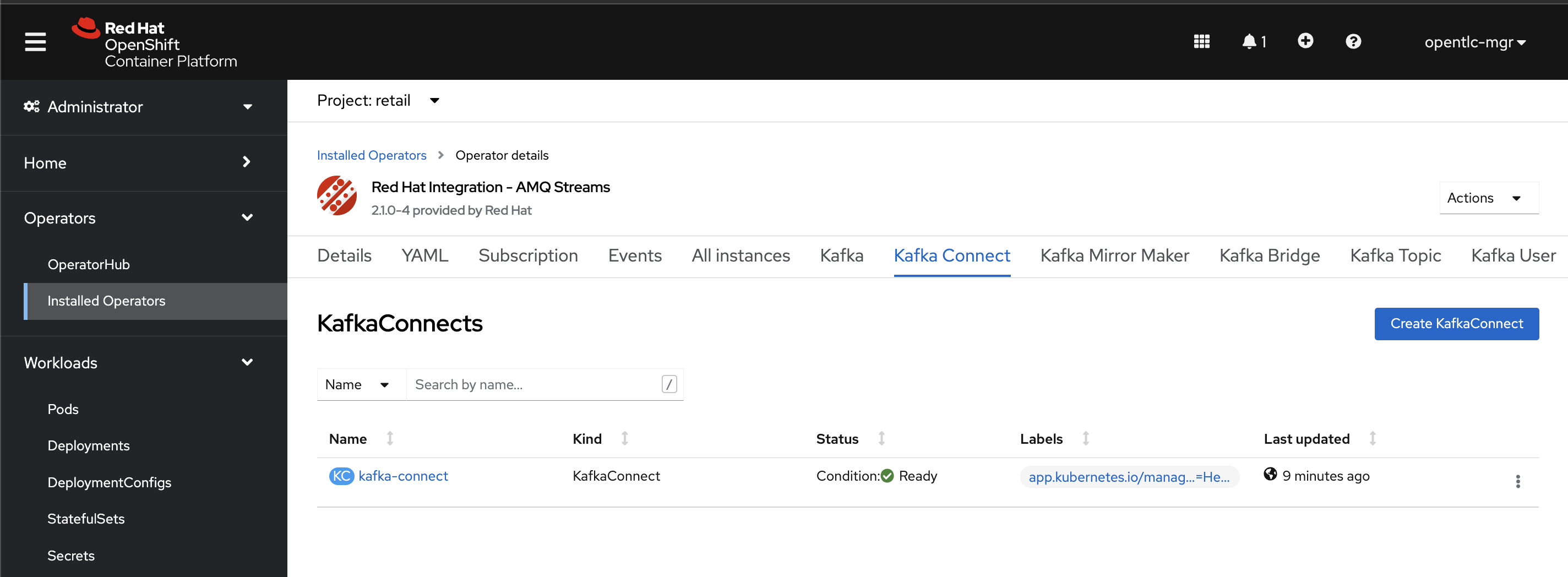

Kafka Connect - Debezium installation

Debezium is provisioned on the cluster using Helm and the Strimzi operator.