Solution Pattern: Accelerate time to value with Red Hat OpenShift Service on AWS

See the Solution in Action

2. ROSA integration with AWS services:

This section shows how to integrate ROSA with several AWS services. Each subsection below is a walkthrough for one service.

2.1. CloudWatch

We can set up ROSA to forward logs to CloudWatch and view them there. The steps below configure CloudWatch logging with STS:

- Run the following commands to set up the environment variables:

export ROSA_CLUSTER_NAME=$(oc get infrastructure cluster -o=jsonpath="{.status.infrastructureName}" | sed 's/-[a-z0-9]\+$//')
export REGION=$(rosa describe cluster -c ${ROSA_CLUSTER_NAME} --output json | jq -r .region.id)
export OIDC_ENDPOINT=$(oc get authentication.config.openshift.io cluster -o json | jq -r .spec.serviceAccountIssuer | sed 's|^https://||')
export AWS_ACCOUNT_ID=`aws sts get-caller-identity --query Account --output text`
export AWS_PAGER=""
export SCRATCH="/tmp/${ROSA_CLUSTER_NAME}/clf-cloudwatch-sts"
mkdir -p ${SCRATCH}
echo "Cluster: ${ROSA_CLUSTER_NAME}, Region: ${REGION}, OIDC Endpoint: ${OIDC_ENDPOINT}, AWS Account ID: ${AWS_ACCOUNT_ID}"

- Create an IAM policy and role for the ClusterLogForwarder, then attach the policy to the role:

# Reuse the RosaCloudWatch policy if it already exists; otherwise create it.
POLICY_ARN=$(aws iam list-policies --query "Policies[?PolicyName=='RosaCloudWatch'].{ARN:Arn}" --output text)
if [[ -z "${POLICY_ARN}" ]]; then
cat << EOF > ${SCRATCH}/policy.json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:CreateLogGroup",
        "logs:CreateLogStream",
        "logs:DescribeLogGroups",
        "logs:DescribeLogStreams",
        "logs:PutLogEvents",
        "logs:PutRetentionPolicy"
      ],
      "Resource": "arn:aws:logs:*:*:*"
    }
  ]
}
EOF
POLICY_ARN=$(aws iam create-policy --policy-name "RosaCloudWatch" \
--policy-document file://${SCRATCH}/policy.json --query Policy.Arn --output text)
fi
echo ${POLICY_ARN}

# Trust policy: only the logcollector service account may assume the role.
cat <<EOF > ${SCRATCH}/trust-policy.json
{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": {
      "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_ENDPOINT}"
    },
    "Action": "sts:AssumeRoleWithWebIdentity",
    "Condition": {
      "StringEquals": {
        "${OIDC_ENDPOINT}:sub": "system:serviceaccount:openshift-logging:logcollector"
      }
    }
  }]
}
EOF
ROLE_ARN=$(aws iam create-role --role-name "${ROSA_CLUSTER_NAME}-RosaCloudWatch" \
--assume-role-policy-document file://${SCRATCH}/trust-policy.json \
--query Role.Arn --output text)
echo ${ROLE_ARN}

aws iam attach-role-policy --role-name "${ROSA_CLUSTER_NAME}-RosaCloudWatch" \
--policy-arn ${POLICY_ARN}

- Deploy the Cluster Logging operator using the commands below:

cat << EOF | oc apply -f -
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  labels:
    operators.coreos.com/cluster-logging.openshift-logging: ""
  name: cluster-logging
  namespace: openshift-logging
spec:
  channel: stable
  installPlanApproval: Automatic
  name: cluster-logging
  source: redhat-operators
  sourceNamespace: openshift-marketplace
EOF

- Create a secret for the cluster log forwarding resource to use:

cat << EOF | oc apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: cloudwatch-credentials
  namespace: openshift-logging
stringData:
  role_arn: $ROLE_ARN
EOF

- Create the cluster log forwarding resource:

cat << EOF | oc apply -f -
apiVersion: "logging.openshift.io/v1"
kind: ClusterLogForwarder
metadata:
  name: instance
  namespace: openshift-logging
spec:
  outputs:
    - name: cw
      type: cloudwatch
      cloudwatch:
        groupBy: namespaceName
        groupPrefix: rosa-${ROSA_CLUSTER_NAME}
        region: ${REGION}
      secret:
        name: cloudwatch-credentials
  pipelines:
    - name: to-cloudwatch
      inputRefs:
        - infrastructure
        - audit
        - application
      outputRefs:
        - cw
EOF

- Create the cluster logging resource:

cat << EOF | oc apply -f -
apiVersion: logging.openshift.io/v1
kind: ClusterLogging
metadata:
  name: instance
  namespace: openshift-logging
spec:
  collection:
    logs:
      type: fluentd
  forwarder:
    fluentd: {}
  managementState: Managed
EOF

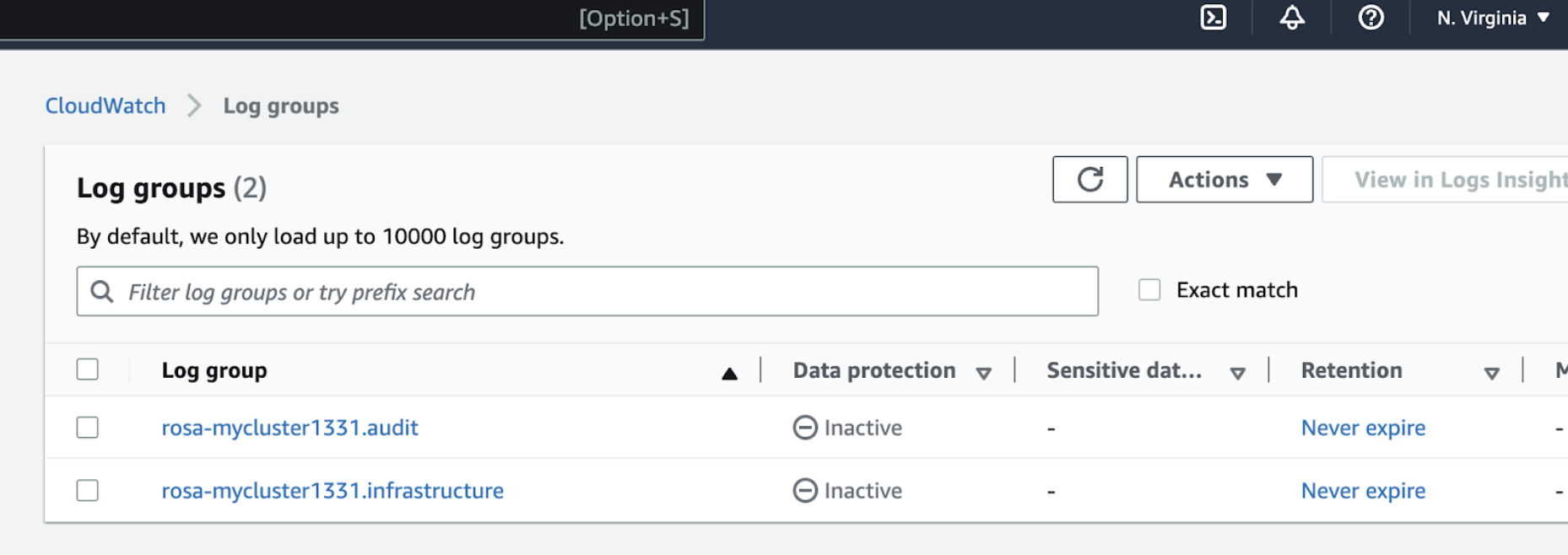

Now we can check the logs in Cloudwatch console, as shown in the below image. In this image, mycluster1331 is the name of ROSA cluster used here for demonstration.
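The first step of this walkthrough derives the cluster name and the OIDC endpoint with two `sed` expressions. The sketch below isolates them as small helper functions so their effect is easier to see. The function names and sample values are hypothetical, and it assumes a `sed` that supports `-E` (the walkthrough itself uses the equivalent `\+` form, a GNU extension).

```shell
# cluster_name_from_infra: drop the trailing "-<suffix>" that OpenShift
# appends to the cluster name to form the infrastructure name.
cluster_name_from_infra() {
  printf '%s\n' "$1" | sed -E 's/-[a-z0-9]+$//'
}

# oidc_endpoint_from_issuer: drop the leading "https://" from the service
# account issuer URL, leaving the form used in IAM OIDC provider ARNs.
oidc_endpoint_from_issuer() {
  printf '%s\n' "$1" | sed 's|^https://||'
}

# Made-up sample values, for illustration only.
cluster_name_from_infra "mycluster1331-x7k2p"
oidc_endpoint_from_issuer "https://oidc.example.invalid/abc123"
```

Only the last hyphen-separated segment is removed, so a cluster name that itself contains hyphens is preserved.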

2.2. IAM (STS only)

- What is AWS STS:

AWS Security Token Service (AWS STS) is a web service that lets us request temporary, limited-privilege credentials for IAM users or for users we authenticate (federated users). Here, AWS STS grants the ROSA service limited, short-term access to resources in your AWS account. Once these credentials expire (typically an hour after they are requested), AWS no longer recognizes them, and API requests made with them no longer have any access to the account.

- What is OIDC:

OpenID Connect (OIDC) provides a mechanism for cluster operators to authenticate with AWS so that they can assume the cluster roles (via a trust policy) and obtain temporary credentials from STS to make the required API calls.

- How secure is AWS STS:

  - An explicit and limited set of roles and policies: the user creates them ahead of time and knows every permission requested and every role used.

  - The service cannot do anything outside of those permissions.

  - Whenever the service needs to perform an action, it obtains credentials that expire in at most 1 hour. There is therefore no need to rotate or revoke credentials, and their expiration reduces the risk of leaked credentials being reused.

-

-

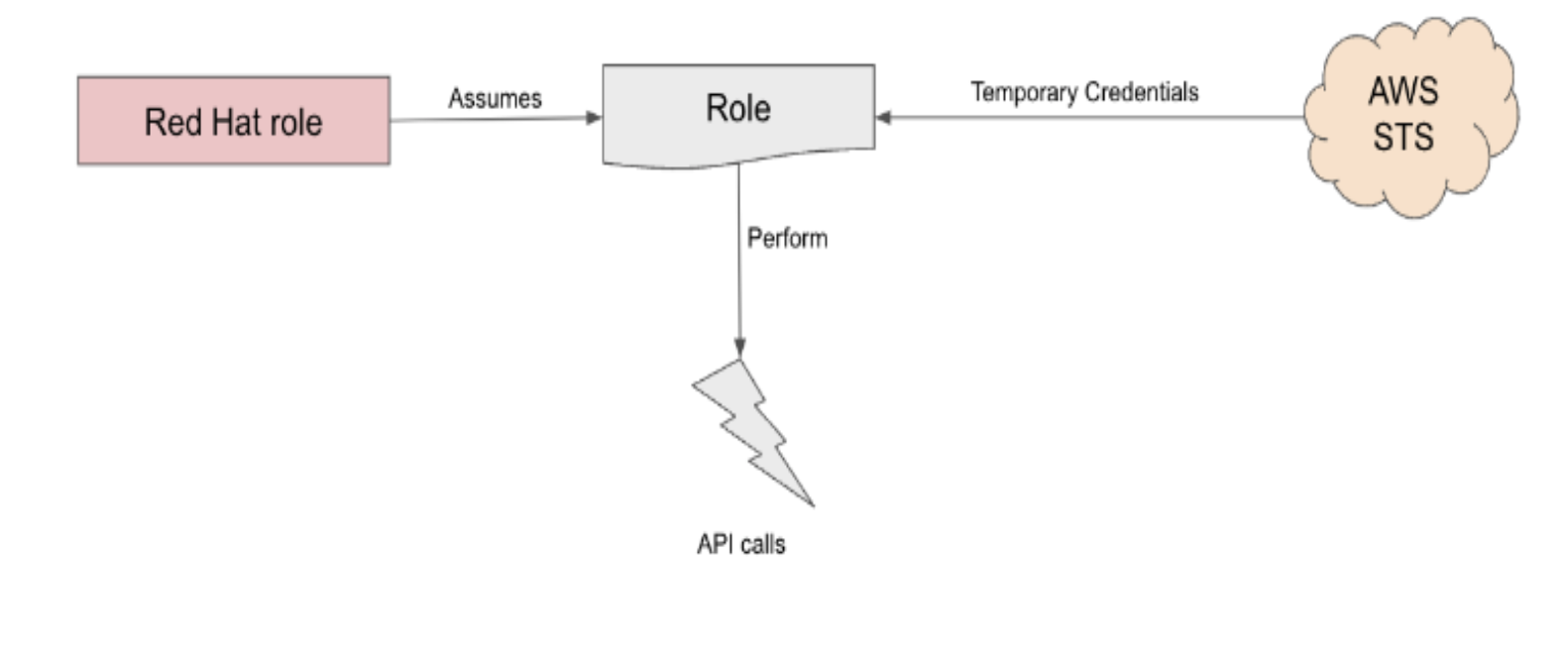

How does ROSA work with STS:

Assume we have a ROSA cluster with STS in place. When a role is needed, the workload, which is currently using the Red Hat role, assumes the role in the AWS account, obtains temporary credentials from AWS STS, and begins performing actions (via API calls) within the customer’s AWS account as permitted by the assumed role’s permissions policy. These credentials are temporary and have a maximum duration of 1 hour.

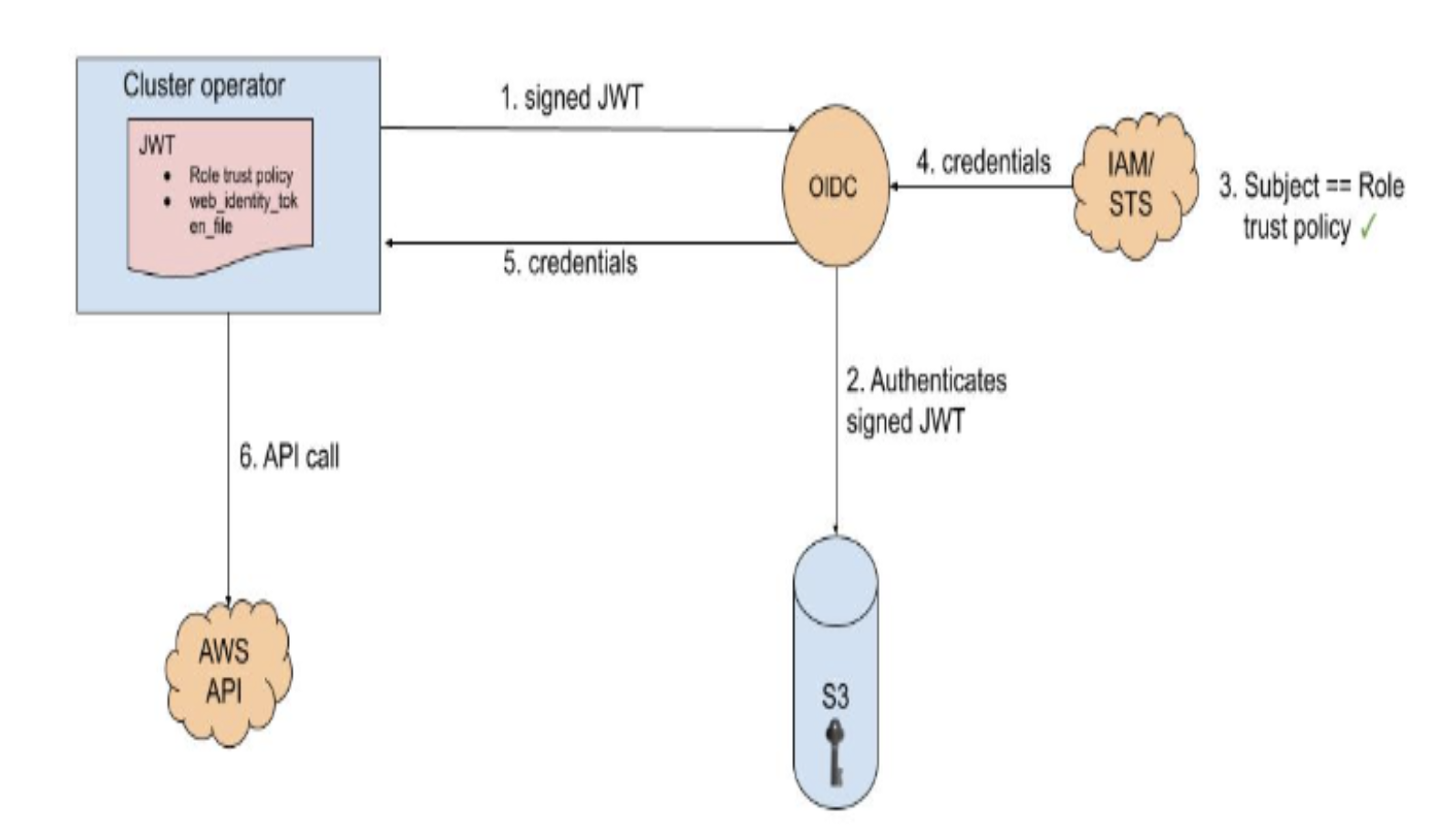

In addition, Operators in the cluster use the following process to get the credentials they need to perform their tasks. Each operator has an operator role, a permissions policy, and a trust policy with an OIDC provider. The operator assumes the role by passing a JWT that contains the role and a token file (web_identity_token_file) to the OIDC provider, which then authenticates the signed key with a public key (created at cluster creation time and stored in an S3 bucket). The provider then confirms that the subject in the signed token file matches the role in the role trust policy, which ensures that an operator can obtain only the role it is allowed. Finally, the temporary credentials are returned to the operator so that it can make AWS API calls. Here is an illustration of this concept:

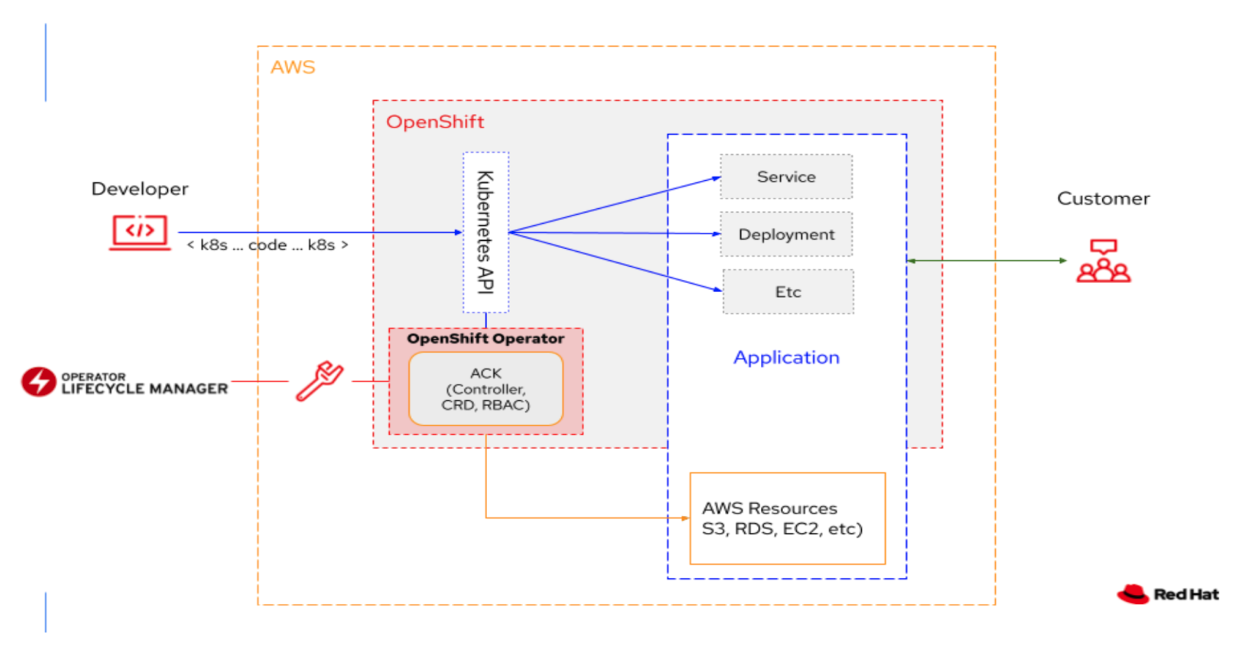
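To make the subject check described above more concrete, here is a minimal sketch that reads the `sub` claim from a service account token so it can be compared by eye with the `StringEquals` condition in a role trust policy. The helper names are hypothetical, it assumes a `base64` that accepts `-d`, and it only decodes the token payload. It does not verify the signature, which is the OIDC provider's job.

```shell
# b64url_decode: decode an unpadded base64url string to stdout.
b64url_decode() {
  s=$(printf '%s' "$1" | tr '_-' '/+')
  # Restore the '=' padding that JWT encoding strips.
  case $(( ${#s} % 4 )) in
    2) s="${s}==" ;;
    3) s="${s}=" ;;
  esac
  printf '%s' "$s" | base64 -d
}

# jwt_sub: print the "sub" claim from a JWT payload (the second
# dot-separated part). The signature is NOT checked here.
jwt_sub() {
  payload=$(printf '%s' "$1" | cut -d. -f2)
  b64url_decode "$payload" |
    sed -n 's/.*"sub"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}
```

Inside a pod that has a projected token, a call such as `jwt_sub "$(cat "$AWS_WEB_IDENTITY_TOKEN_FILE")"` should print a value of the form `system:serviceaccount:<namespace>:<service-account>`, which is the string the trust policy condition is matched against.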

2.3. ACK (AWS Controllers for Kubernetes):

AWS Controllers for Kubernetes (ACK) is an open-source project that defines a framework for building custom controllers (Kubernetes Operators) for AWS services. These custom controllers let developers define, create, deploy, control, update, delete, and manage AWS services directly from inside Kubernetes clusters. Instead of managing containerized applications on Kubernetes clusters separately from the AWS services they depend on (e.g., S3 buckets, databases, ElastiCache), ACK controllers allow centralized management through the Kubernetes CLI. Developers can manage both Kubernetes-native applications and the resources those applications depend on using the same Kubernetes API.

Here’s an example of a Postgres RDS database. Notice that the password is sourced from a Kubernetes Secret named “rds-postgresql-user-creds” in the “production” namespace. An application running in the same namespace can also import this secret to connect to the database.

apiVersion: rds.services.k8s.aws/v1alpha1
kind: DBInstance
metadata:
  name: example-database
spec:
  allocatedStorage: 20
  dbInstanceClass: db.t3.micro
  dbInstanceIdentifier: example-database
  engine: postgres
  engineVersion: "14"
  masterUsername: myusername
  masterUserPassword:
    namespace: production
    name: rds-postgresql-user-creds
    key: password

These ACK controllers save developers time because they can provision and manage AWS resources directly from inside their Kubernetes cluster. This lets them focus on code and on delivering business value quickly, instead of spending time managing Kubernetes and AWS resources separately. Being able to access and manage resources from inside the Kubernetes cluster also lends itself well to applying GitOps principles to mixed container and cloud resources. It also gives developers visibility into the entire application stack and a smoother experience overall.
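The DBInstance example above refers to a Secret but does not show it. As a rough sketch, the commands below write a matching Secret manifest to a file. The name, namespace, and key come from the example, while the `DB_PASSWORD` and `SECRET_MANIFEST` variables, the `change-me` default, and the file location are placeholders introduced here for illustration.

```shell
# Placeholder password; in practice take it from a secure source.
DB_PASSWORD="${DB_PASSWORD:-change-me}"
# Hypothetical output path for the generated manifest.
SECRET_MANIFEST="${SECRET_MANIFEST:-${TMPDIR:-/tmp}/rds-postgresql-user-creds.yaml}"

# The name, namespace, and key match the masterUserPassword reference
# in the DBInstance example.
cat > "${SECRET_MANIFEST}" <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: rds-postgresql-user-creds
  namespace: production
type: Opaque
stringData:
  password: "${DB_PASSWORD}"
EOF

echo "Wrote ${SECRET_MANIFEST}"
# Once logged in to the cluster, apply it with:
#   oc apply -f "${SECRET_MANIFEST}"
```

A password containing double quotes or backslashes would need escaping before being written into the YAML this way.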

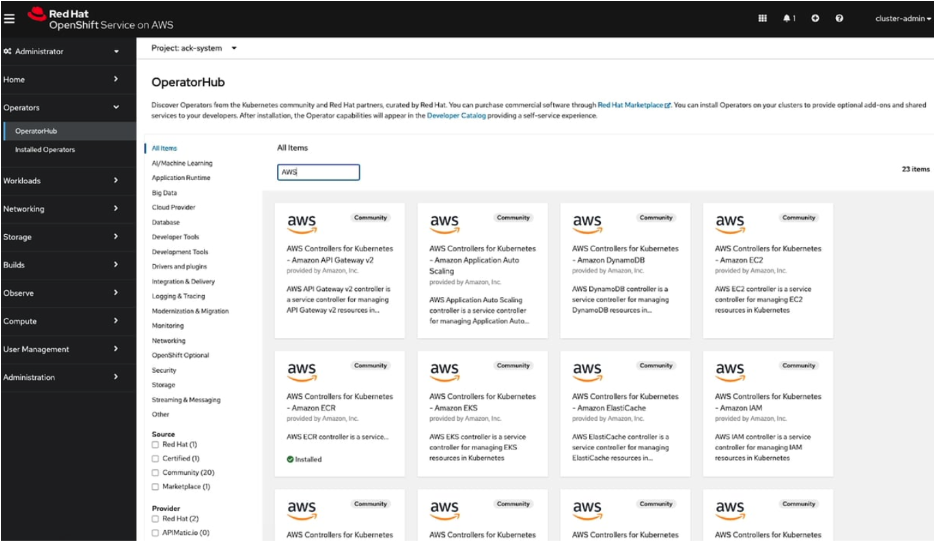

The cool part is that these ACK Controllers are now available in Red Hat OpenShift and Red Hat OpenShift Service on AWS (ROSA), as a result of the deep collaboration between AWS and Red Hat.

OpenShift and ROSA customers who have an AWS account and use AWS resources (S3 buckets, ElastiCache, DynamoDB etc.) can now access these ACK controllers and manage their services from inside their OpenShift and ROSA clusters! They can install the AWS service-specific controller into the cluster and start managing the resources and create more resources right in the cluster, making their OpenShift experience very consistent, regardless of the task. These SaaS Operators further open up newer ways to consume OpenShift and a variety of services that applications will need from other cloud providers. It helps provide developers a truly hybrid experience. Red Hat and AWS engineers helped automate the generation of a lot of these operators that are now visible in OpenShift and ROSA.

2.4. Amazon S3 (Simple Storage Service)

IAM supports federated identities using an OpenID Connect (OIDC) identity provider. This feature allows customers to authenticate AWS API calls with supported identity providers and receive a valid OIDC JSON web token (JWT). Pods can request this token and pass it to the AWS STS AssumeRoleWithWebIdentity API operation to receive temporary IAM role credentials.

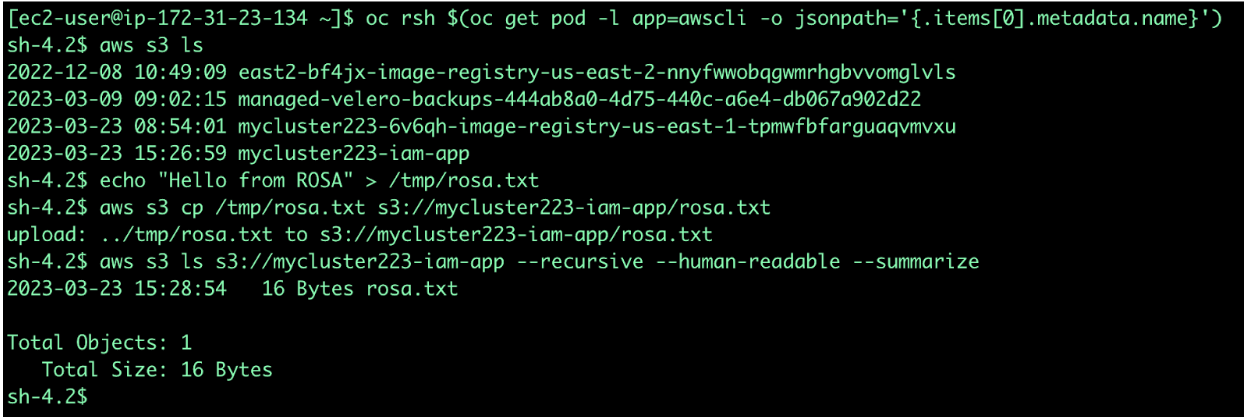

Here we will see how ROSA with STS enabled can use IAM roles for service accounts to provide access for a Kubernetes pod to an Amazon Simple Storage Service (Amazon S3) bucket.
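The link between a service account and an IAM role lives in the role's trust policy, which the first step below writes to `trust.json`. The sketch that follows produces the same document from four arguments, which makes it easier to see how the `sub` condition is built from the namespace and service account name. The function name and the sample values are hypothetical.

```shell
# render_trust_policy ACCOUNT_ID OIDC_PROVIDER NAMESPACE SERVICE_ACCOUNT
# Prints a trust policy that lets exactly one service account assume the role.
render_trust_policy() {
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::$1:oidc-provider/$2"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "$2:sub": "system:serviceaccount:$3:$4"
        }
      }
    }
  ]
}
EOF
}

# Made-up account ID and OIDC provider, for illustration only.
render_trust_policy 123456789012 oidc.example.invalid/abc123 iam-app iam-app-s3-sa
```

Because the condition uses `StringEquals` on the full subject, a pod running under any other service account, or in any other namespace, cannot assume the role.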

- Run the following commands to create a service account and associate an IAM role with it. In this example, the ROSA cluster name is mycluster223.

export ROSA_CLUSTER_NAME=mycluster223
export SERVICE="s3"
export AWS_REGION=us-east-1
export AWS_ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
export APP_NAMESPACE=iam-app
export APP_SERVICE_ACCOUNT_NAME=iam-app-$SERVICE-sa
export OIDC_PROVIDER=$(oc get authentication.config.openshift.io cluster -o json | jq -r .spec.serviceAccountIssuer | sed 's/https:\/\///')

oc new-project $APP_NAMESPACE
oc create serviceaccount $APP_SERVICE_ACCOUNT_NAME -n $APP_NAMESPACE

cat <<EOF > ./trust.json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${AWS_ACCOUNT_ID}:oidc-provider/${OIDC_PROVIDER}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${OIDC_PROVIDER}:sub": "system:serviceaccount:${APP_NAMESPACE}:${APP_SERVICE_ACCOUNT_NAME}"
        }
      }
    }
  ]
}
EOF

export APP_IAM_ROLE="iam-app-${SERVICE}-role"
# Double quotes so that $SERVICE is expanded in the description.
export APP_IAM_ROLE_ROLE_DESCRIPTION="IRSA role for APP $SERVICE deployment on ROSA cluster"
aws iam create-role --role-name "${APP_IAM_ROLE}" --assume-role-policy-document file://trust.json --description "${APP_IAM_ROLE_ROLE_DESCRIPTION}"
APP_IAM_ROLE_ARN=$(aws iam get-role --role-name=$APP_IAM_ROLE --query Role.Arn --output text)
POLICY_ARN=$(aws iam list-policies --query 'Policies[?PolicyName==`AmazonS3FullAccess`].Arn' --output text)
aws iam attach-role-policy --role-name "${APP_IAM_ROLE}" --policy-arn "${POLICY_ARN}" > /dev/null

export IRSA_ROLE_ARN=eks.amazonaws.com/role-arn=$APP_IAM_ROLE_ARN
oc annotate serviceaccount -n $APP_NAMESPACE $APP_SERVICE_ACCOUNT_NAME $IRSA_ROLE_ARN

- We can describe the service account to see the details:

oc describe serviceaccount $APP_SERVICE_ACCOUNT_NAME -n $APP_NAMESPACE

- Now we will create a Deployment to test the S3 access:

cat <<EOF | oc create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: ${APP_NAMESPACE}
  name: awscli
  labels:
    app: awscli
spec:
  replicas: 1
  selector:
    matchLabels:
      app: awscli
  template:
    metadata:
      labels:
        app: awscli
    spec:
      containers:
        - image: amazon/aws-cli:latest
          name: awscli
          command:
            - /bin/sh
            - "-c"
            - while true; do sleep 10; done
          env:
            - name: HOME
              value: /tmp
      serviceAccount: ${APP_SERVICE_ACCOUNT_NAME}
EOF

When using a Web Identity, the AWS clients and SDKs look for environment variables called AWS_WEB_IDENTITY_TOKEN_FILE and AWS_ROLE_ARN. For the Python Boto3 SDK, this is documented here.

The EKS Pod Identity Webhook running in the OpenShift cluster can place these variables in a running pod for us, as long as a service account with an IAM role defined has been attached to the pod. For the demonstration workload running in our ROSA cluster, we can confirm that these variables have been injected into the pod by running:

oc describe pod $(oc get pod -l app=awscli -o jsonpath='{.items[0].metadata.name}') | grep "^\s*AWS_"

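- Create an S3 bucket (either from the AWS Console or from a terminal outside ROSA):

export S3_BUCKET_NAME=$ROSA_CLUSTER_NAME-iam-app
# S3 rejects a LocationConstraint for us-east-1, so pass it only for other regions.
if [ "$AWS_REGION" = "us-east-1" ]; then
aws s3api create-bucket --bucket $S3_BUCKET_NAME --region $AWS_REGION > /dev/null
else
aws s3api create-bucket --bucket $S3_BUCKET_NAME --region $AWS_REGION --create-bucket-configuration LocationConstraint=$AWS_REGION > /dev/null
fi

Now we will get the running pod and use it to access the S3 bucket created in the previous step. This screenshot shows us what it will look like.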
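Before running S3 commands from the pod, it can help to confirm that the two web identity variables mentioned earlier are actually usable. The function below is a small sketch of such a check. Its name and messages are hypothetical, and it assumes it is pasted into a shell inside the pod (for example, one opened with `oc rsh`).

```shell
# check_web_identity_env: succeed only if both variables that the AWS
# clients look for are set and the token file is readable.
check_web_identity_env() {
  if [ -z "${AWS_ROLE_ARN:-}" ]; then
    echo "AWS_ROLE_ARN is not set" >&2
    return 1
  fi
  if [ -z "${AWS_WEB_IDENTITY_TOKEN_FILE:-}" ]; then
    echo "AWS_WEB_IDENTITY_TOKEN_FILE is not set" >&2
    return 1
  fi
  if [ ! -r "${AWS_WEB_IDENTITY_TOKEN_FILE}" ]; then
    echo "token file is not readable: ${AWS_WEB_IDENTITY_TOKEN_FILE}" >&2
    return 1
  fi
  echo "web identity configured for ${AWS_ROLE_ARN}"
}
```

If the check passes, `aws sts get-caller-identity` run in the same shell should report the assumed role, and `aws s3 ls` can then be used to list the buckets that role is allowed to see.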